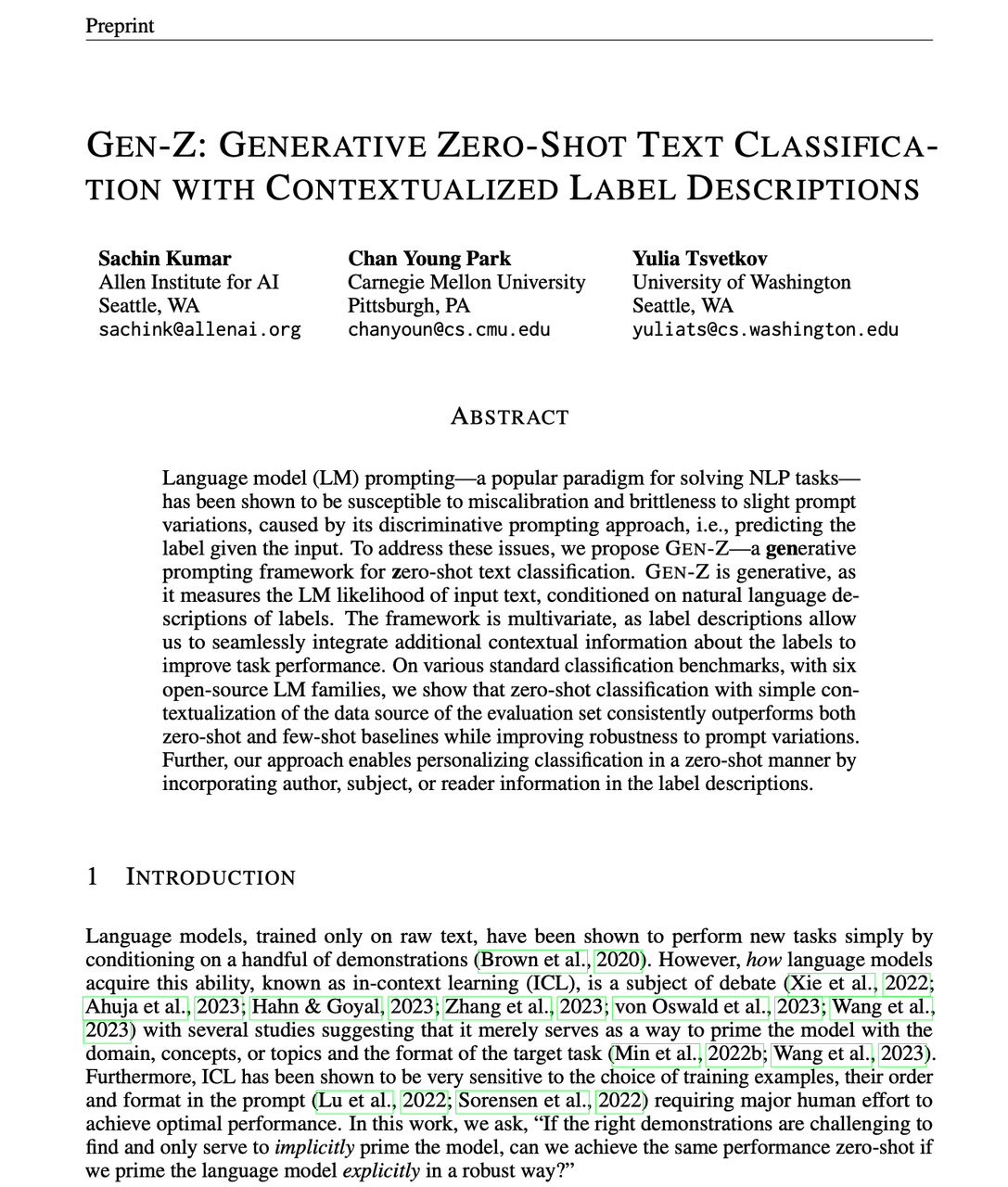

"文档原文":"Language models, trained only on raw text, have been shown to perform new tasks simply by conditioning on a handful of demonstrations (Brown et al., 2020). However, how language models acquire this ability, known as in-context learning (ICL), is a subject of debate (Xie et al., 2022; Ahuja et al., 2023; Hahn & Goyal, 2023; Zhang et al., 2023; von Oswald et al., 2023; Wang et al., 2023) with several studies suggesting that it merely serves as a way to prime the model with the domain, concepts, or topics and the format of the target task (Min et al., 2022b; Wang et al., 2023). Furthermore, ICL has been shown to be very sensitive to the choice of training examples, their order and format in the prompt (Lu et al., 2022; Sorensen et al., 2022) requiring major human effort to achieve optimal performance. In this work, we ask, “If the right demonstrations are challenging to find and only serve to implicitly prime the model, can we achieve the same performance zero-shot if we prime the language model explicitly in a robust way?” We introduce GEN-Z, a robust zero-shot generative prompting framework for text classification (Figure 1) which achieves results on par with in-context learning with much better stability in performance. ";

"翻译结果":"仅接受原始文本训练的语言模型已被证明可以通过简单地根据少量演示进行条件化来执行新任务(Brown等人,2020年)。然而,语言模型如何获得这种被称为上下文学习(ICL)的能力,这是一个有争议的话题(Xie等人,2022年;Ahuja等人,2023年;Hahn和Goyal,2023年;Zhang等人,2023年;von Oswald等人,2023年;Wang等人,2023年),一些研究表明,它仅仅作为一种用领域、概念或主题以及目标任务的格式来启动模型的方式(Min等人,2022b;Wang等人,2023年)。此外,ICL已被证明对训练示例的选择、它们在提示中的顺序和格式非常敏感(Lu等人,2022年;Sorensen等人,2022年),需要大量的人工工作才能达到最佳性能。在这项工作中,我们问道:“如果难以找到正确的演示,而它们仅仅用来隐含地启动模型,那么我们能否通过以稳健的方式显式启动语言模型来实现相同的零样本性能?”我们介绍了GEN-Z,这是一个用于文本分类的稳健的零样本生成提示框架(图1),它实现了与上下文学习相当的结果,同时具有更好的性能稳定性。我们的方法包括两个关键思想。";

润色后结果展示:

润色后结果展示: